What is your data not telling you?

nPowered is an independent European data science lab that specializes in Artificial Intelligence solutions for business.

Having accumulated considerable experience providing services to big-league corporate clients, we’re a start-up no more. But we keep the feel.

Our in-house team is constantly pursuing the next big thing with our clients, helping them improve, grow and innovate. We know what your data is not telling you, and we’re happy to share our knowledge and experience, preferably over a cup of coffee.

We excel at all things AI

Check out some of our past work and see what’s possible

See how we work

nPowered Sp. z o.o.

ul. Płocka 5A

01-231 Warsaw

KRS 0000568653

Tax ID 527-274-12-27

REGON 362126841

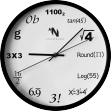

Our math is nothing like the math you know. Our math is the mathest.

We solve unorthodox problems that require special weapons and tactics on a daily basis. We find solutions to problems that are too complex for experts, and engage in business modeling so advanced that tools much stronger than Excel will run out of steam.

That is why we keep in our armory neural networks, recommender engines and evolutionary algorithms, and discuss swarm intelligence, gradient methods, kernel density estimation, or random forests over lunch.

After lunch, we turn them into real-life business solutions which, thanks to the crazy advanced mathematics, are at the same time cutting-edge and bullet-proof.

Mathematics is also our secret business weapon as we walk our clients through every decision, validate the results and help them understand where the optimum lies.

create

The early stage of our process always requires close interaction with our client: workshops, data discovery visits, brainstorming sessions and coffee. Lots of coffee. This way we can make sure our solution meets the highest standards and lives up to even the most demanding of expectations – theirs and ours.

prototype

Here, we turn the concept into a living reality. We review the quality of data and the accuracy of the calculations in practice. At this stage, we can already measure predicted project outcomes. It is great for the clients too, as it introduces an early check-point, reduces risks and demonstrates value.

develop & test

Our development is a direct and interactive process, always client-centered, flexible and driven by our agile approach to scope management. Testing, on the other hand, is fierce and merciless.

support & maintain

We’re not done when we’re done. We provide full support and maintenance, from resolving any technical issues and answering business queries, to scaling up and adding features.

We build user-friendly tools with excellent UX and UI design, because our software is meant for the business user, not a rocket scientist.

Although we are happy to work with popular analytics packages, most of the solutions we develop are based on our own proprietary software, tailor-made for the given problem. Relying on our very own collection of libraries, we can build anything. The sky is hardly the limit.

Our usual weapon of choice is Java and open source software, but we cherry-pick the technology for the optimal effect and to cater for individual needs. We are happy to use R, Hadoop or Solr, if you prefer, but we will also tell you why and when one should not.

We design our tools with scalability and expandability in mind from the get-go. Our software performs well on average workstations, small servers or large clouds, as needed. Most important, however, is to keep the development process simple and the code clean. This way, even the most complicated problems can find agile solutions.

Code clean, or die (trying)

Our client, a leading European mobile operator, was valuing spectrum bands and scenarios ahead of an 800/2600 MHz auction. Due to the very high expected costs of the 15-year frequency reservations, the client sought a world-class AI solution that would challenge and support their in-house strategic decision-making process.

We delivered a tool to calculate band/scenario valuation based on a long-term DCF analysis, comparing a set of machine-generated, optimal and constrained network strategies under different auction outcomes. We built a comprehensive, cell-level telecommunication network simulator, covering in detail all parameters determining the behavior of a mobile network, including radio technologies, frequency assets, base station capacity and wave propagation. We captured the logic in user terminals and network traffic control systems in order to create a macro-level solution that is still realistic even on the level of a single base station. The optimization module automatically identified the optimal strategy for network development for each set of user-selected boundary conditions, which included coverage requirements, service quality, shared network costs, or the maximum speed of network roll-out.
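As a rough illustration of the DCF comparison described above, the sketch below discounts a stream of yearly cash flows and ranks auction outcomes by net present value. The scenario names, cash flows and the 8% discount rate are made-up placeholders, not figures from the project, and the real tool derived its cash flows from a full network simulation rather than from a hand-written table.

```python
def npv(cash_flows, rate):
    """Net present value of yearly cash flows; cash_flows[0] occurs today (year 0)."""
    return sum(cf / (1.0 + rate) ** year for year, cf in enumerate(cash_flows))


def rank_scenarios(scenarios, rate):
    """Return (name, npv) pairs sorted from most to least valuable."""
    valued = [(name, npv(flows, rate)) for name, flows in scenarios.items()]
    return sorted(valued, key=lambda pair: pair[1], reverse=True)


# Hypothetical auction outcomes: an upfront licence fee, then yearly net cash flows.
scenarios = {
    "win_800_only": [-100.0, 30.0, 30.0, 30.0, 30.0, 30.0],
    "win_800_and_2600": [-160.0, 50.0, 50.0, 50.0, 50.0, 50.0],
    "win_nothing": [0.0, 2.0, 2.0, 2.0, 2.0, 2.0],
}

ranking = rank_scenarios(scenarios, rate=0.08)
for name, value in ranking:
    print(f"{name}: {value:.1f}")
```

The difference in NPV between two outcomes gives a simple upper bound on what the better outcome is worth paying for, which is the kind of question a band valuation has to answer.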

Given the enormous computational complexity, we used Java to create a dedicated software solution, and based the optimization technique on evolutionary algorithms combined with constraint modeling, supported by a set of heuristics that helped find the global optimum of the objective function. Built-in segmentation methods helped avoid the pitfalls of local minima. The tool was also designed to run on a cluster of off-the-shelf equipment in order to reduce the calculation time.
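To give a feel for how an evolutionary algorithm can respect constraints, here is a minimal toy sketch in Python (the project itself was written in Java and is far more elaborate). It picks a subset of hypothetical upgrade options that maximizes value within a budget; the `repair` step, the truncation selection and all numbers are assumptions chosen for illustration, not the heuristics used in the actual tool.

```python
import random


def evolve(values, costs, budget, generations=200, pop_size=40, seed=0):
    """Toy evolutionary search: pick items maximizing value with total cost <= budget."""
    rng = random.Random(seed)
    n = len(values)

    def total(weights, genome):
        return sum(w for w, bit in zip(weights, genome) if bit)

    def repair(genome):
        # Constraint handling: drop randomly chosen items until the budget holds.
        genome = list(genome)
        chosen = [i for i, bit in enumerate(genome) if bit]
        rng.shuffle(chosen)
        while total(costs, genome) > budget:
            genome[chosen.pop()] = 0
        return genome

    def fitness(genome):
        return total(values, genome)

    population = [repair([rng.randint(0, 1) for _ in range(n)]) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]  # truncation selection keeps the elite
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)  # one-point crossover
            child = a[:cut] + b[cut:]
            flip = rng.randrange(n)  # single-bit mutation
            child[flip] = 1 - child[flip]
            children.append(repair(child))
        population = parents + children
    best = max(population, key=fitness)
    return best, fitness(best)


# Hypothetical upgrade options: value gained and cost of each, with a budget of 10.
values = [10, 40, 30, 50, 35, 25]
costs = [5, 4, 6, 3, 7, 2]
budget = 10
best, score = evolve(values, costs, budget)
```

Because every candidate is repaired before it enters the population, the search only ever compares feasible solutions; penalty terms in the fitness function are a common alternative.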

EUR 2.2 billion total auction value

Over 2 million scenarios in each run

6 desktop PCs

Our client sought a solution to automate retail and wholesale price management for a large countrywide retail chain. Both the pricing algorithm (which determined which components affect the price of a product, and to what extent) and the constraints the client required (product pricing assumptions and minimal price differences) made the optimization problem extremely complex and, to be honest, terribly interesting.

We created a tool to automate and simplify the process of price management in the organization. Our mechanism took inputs directly from database dumps and data warehouses, and presented the results to users in a clear and user-friendly manner. What’s more, it fully supported manual corrections by different members of the organization, while showing how each change affects the monitored KPIs.

We prepared a solution consisting of a multi-threaded mathematical engine implemented in Java, combined with a web-based user interface integrated with MS Excel for user convenience. At its core, the algorithm is a constrained optimization: the software must deal with often conflicting constraints introduced by the user, and when they cannot all be satisfied, it distributes the resulting non-compliance optimally across them. The algorithm was based on gradient methods.
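One common way to let a gradient method handle conflicting constraints is to turn each constraint into a penalty term, so that violations are traded off against each other instead of causing outright failure. The Python sketch below shows that idea on minimal price differences; the quadratic penalty, the step size and the example prices are assumptions for illustration, not the formulation used in the delivered engine.

```python
def optimize_prices(targets, min_gaps, penalty=10.0, step=0.01, iterations=5000):
    """Gradient descent on: sum (p_i - target_i)^2 + penalty * sum violation^2.

    min_gaps holds (low, high, gap) triples meaning prices[high] - prices[low] >= gap.
    """
    prices = list(targets)
    for _ in range(iterations):
        # Gradient of the "stay close to the target price" term.
        grad = [2.0 * (p - t) for p, t in zip(prices, targets)]
        for low, high, gap in min_gaps:
            violation = gap - (prices[high] - prices[low])
            if violation > 0:  # only broken constraints contribute
                grad[low] += 2.0 * penalty * violation
                grad[high] -= 2.0 * penalty * violation
        prices = [p - step * g for p, g in zip(prices, grad)]
    return prices


# Two products with the same target price, but the second must cost at least 2.0 more.
prices = optimize_prices(targets=[10.0, 10.0], min_gaps=[(0, 1, 2.0)])
print([round(p, 3) for p in prices])
```

In this example the two prices move apart symmetrically, and a small residual violation remains because the penalty is finite; raising `penalty` tightens the constraint at the cost of moving further from the target prices.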

Over 80 million data points per calculation

Over 4000 SKUs

Over 1000 stores

Our client, an outsourcing company, was looking for the best allocation of its 30+ maintenance centers, which provided services at over 5,000 locations throughout the country. Under the protocol in place, each location required a certain number of prescheduled maintenance checks, each using resources such as tools, devices and the work of trained personnel. Our task was to reduce a specific cost function and determine the minimum number of service facilities that would ensure adequate and cost-effective maintenance at all serviced locations.

Thanks to the optimization algorithms, we found a solution that reduced the cost function by 24% and cut the number of maintenance centers by 23%, while maintaining all required SLA parameters and the quality of service.

We created an operations model that simulated the entire ecosystem of the client's business and their key contracts. We used evolutionary algorithms combined with Self-Organizing Neural Networks, which are a class of unsupervised learning methods. The solution was prototyped in GAUSS, and then migrated to a proprietary Java implementation.

Over 5,000 locations

cost function value reduced by 24%

no. of service facilities reduced by 23%

Our client required support in a multimillion-dollar legal dispute with an incumbent telecom company over the wholesale access settlements. We were asked to prepare a report, to be presented during the dispute proceedings, assessing the validity of 5 years’ worth of past settlements based on raw source TAP/CDR data, and commenting on the accuracy of the inter-operator billing process under review. In 3 weeks, from scratch.

Yes. We prepared a report presenting the required statistics and evaluating the accuracy of the inter-operator settlements in question. The outputs were based on an advanced record pairing algorithm, reflecting the contractual business billing logic in question, which allowed us to present results for several alternative scenarios. The analysis covered all raw source data (no sampling) provided to us via a DCH - over 5 billion records collected over 5 years stored in over 40,000 TAP files. The ultimate ruling was favorable to our client.

We created a dedicated, custom software solution (Java and R), and performed Call Data Record (CDR) analysis, based on a proprietary mechanism for finding non-trivial dependencies in an entire dataset (e.g. matching corresponding partial records for any relevant network event). We put special emphasis on code optimization, as we aimed for a very short conversion time of a few hours and could not offload the computation offsite. Two independent teams worked on their separate solutions in parallel (Chinese wall), to catch possible errors. The entire process was completed in a very short timeframe of three weeks, with no involvement from the client’s in-house technology team or their hardware/software resources.
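The pairing mechanism itself is proprietary and not described here, but the basic idea of matching partial records that belong to one network event can be sketched as follows. The field names (`event_id`, `sequence`, `seconds`) are hypothetical and do not reflect the actual TAP/CDR layout; the sketch simply groups partial records by a shared key, merges them, and flags events with missing or duplicated parts.

```python
from collections import defaultdict


def pair_records(partials):
    """Group partial records by a shared event key and merge each group.

    Each partial is a dict with hypothetical fields: 'event_id', 'sequence', 'seconds'.
    """
    groups = defaultdict(list)
    for record in partials:
        groups[record["event_id"]].append(record)

    merged = {}
    for event_id, parts in groups.items():
        parts.sort(key=lambda r: r["sequence"])
        sequences = [r["sequence"] for r in parts]
        merged[event_id] = {
            "seconds": sum(r["seconds"] for r in parts),
            "parts": len(parts),
            # Flag events whose partial records are missing or duplicated.
            "complete": sequences == list(range(1, len(parts) + 1)),
        }
    return merged


partials = [
    {"event_id": "A", "sequence": 2, "seconds": 40},
    {"event_id": "A", "sequence": 1, "seconds": 60},
    {"event_id": "B", "sequence": 2, "seconds": 15},  # part 1 of event B is missing
]
result = pair_records(partials)
```

Grouping through a dictionary keeps the pass over the data linear in the number of records, which matters when no sampling is allowed; at billions of records, a real pipeline would also need to partition the data rather than hold it all in memory.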

5 billion records

3 weeks

0 client IT resources available